As AR and VR devices become a bigger part of how we work and play, how do we maintain seamless social connection between real and virtual worlds? In other words, how do we maintain “social co-presence” in shared spaces among people who may or may not be involved in the same AR/VR experience?

This year at SIGGRAPH, Facebook Reality Labs (FRL) Research will present a new concept for social co-presence with virtual reality headsets: reverse passthrough VR, a project led by research scientist Nathan Matsuda. Put simply, reverse passthrough is an experimental VR research demo that allows the eyes of someone wearing a headset to be seen by the outside world. This is in contrast to what Quest headsets can do today with Passthrough+ and the experimental Passthrough API, which use externally facing cameras to help users easily see their external surroundings while they’re wearing the headset.

Over the years, we’ve made strides in enabling Passthrough features for Oculus for consumers and developers to explore. In fact, the idea for this experimental reverse passthrough research occurred to Matsuda after he spent a day in the office wearing a Quest headset with Passthrough, thinking through how to make mixed reality environments more seamless for social and professional settings. Wearing the headset with Passthrough, he could see his colleagues and the room around him just fine. But his colleagues couldn’t see him without an external display. Every time he attempted to speak to someone, they remarked how strange it was that he wasn’t able to make eye contact. So Matsuda posed the question: What if you could see his eyes — would that add something to the social dynamic?

When Matsuda first demonstrated reverse passthrough for FRL Chief Scientist Michael Abrash in 2019, Abrash was unconvinced about the utility of this work. In the demo, Matsuda wore a custom-built Rift S headset with a 3D display mounted to the front. On the screen, a floating 3D image of Matsuda’s face, crudely rendered from a game engine, re-created his eye gaze using signals from a pair of eye-tracking cameras inside the headset.

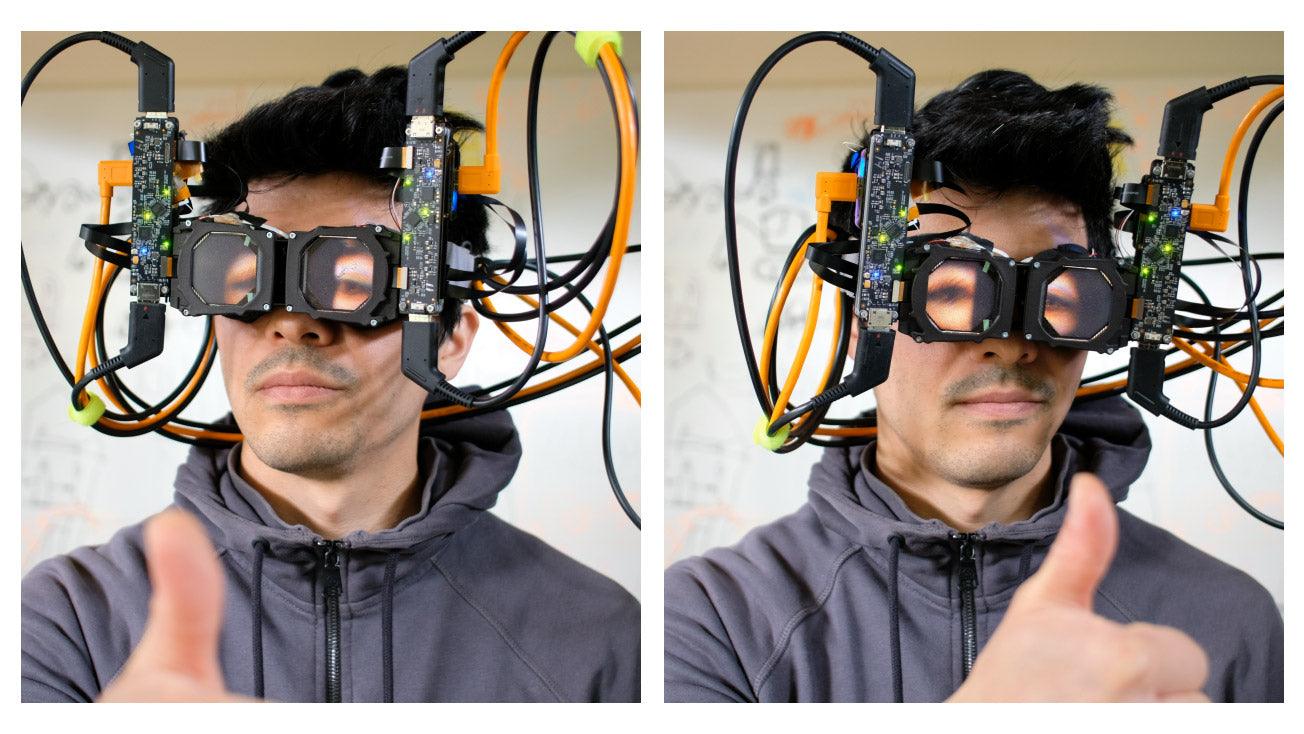

Left: Research scientist Nathan Matsuda wears an early reverse passthrough prototype with 2D outward-facing displays. Right: The first fully functional reverse passthrough demo using 3D light field displays.

“My first reaction was that it was kind of a goofy idea, a novelty at best,” said Abrash. “But I don’t tell researchers what to do, because you don’t get innovation without freedom to try new things, and that’s a good thing, because now it’s clearly a unique idea with genuine promise.”

Nearly two years after the initial demo, the 3D display technology and research prototype have evolved significantly, featuring purpose-built optics, electronics, software, and a range of supporting technologies to capture and depict more realistic 3D faces. This progress is promising, but this research is clearly still experimental: Tethered by many cables, it’s far from a standalone headset, and the eye and facial renderings are not yet completely lifelike. However, it is a research prototype designed in the spirit of FRL Research’s core ethos to run with far-flung concepts that may seem a bit outlandish. While this work is nowhere near a product roadmap, it does offer a glimpse into how reverse passthrough could be used in collaborative spaces of the future — both real and virtual.

Left: A VR headset with the external display disabled, representing the current state of the art. No gaze cues are visible through the opaque headset enclosure. Middle: A VR headset with outward-facing 2D displays, as proposed in prior academic works[1][2][3][4]. Some gaze cues are visible, but the incorrect perspective limits the viewer’s ability to discern gaze direction. Right: Our recent prototype uses 3D reverse passthrough displays, showing correct perspective for multiple external viewers.

Reverse passthrough

The essential component in a reverse passthrough headset is the externally facing 3D display. You could simply put a 2D display on the front of the headset and show a flat projection of the user’s face on it, but the offset from the user’s actual face to the front of the headset makes for a visually jarring, unnatural effect that breaks any hope of reading correct eye contact. As the research prototype evolved, it was clear that a 3D display was a better direction, as it would allow the user’s eyes and face to appear at the correct position in space on the front of the headset. This depiction helps maintain alignment as external viewers move in relation to the 3D display.
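To get a feel for why the offset matters, here is a rough geometric sketch. It assumes a flat image drawn for a head-on viewer on a display that sits some distance in front of the wearer's real eyes; for a viewer standing off to the side, the drawn eyes then appear shifted sideways by roughly that distance times the tangent of the viewing angle. The function name and the 50 mm offset are illustrative assumptions, not measurements from the prototype.

```python
import math

def parallax_error_mm(depth_offset_mm, viewing_angle_deg):
    """Sideways shift between where a flat image draws the eye and where
    the real eye would appear, for a viewer standing off-axis.

    depth_offset_mm: assumed distance from the real eyes to the display.
    viewing_angle_deg: viewer's angle away from straight ahead.
    """
    return depth_offset_mm * math.tan(math.radians(viewing_angle_deg))

# Hypothetical 50 mm offset, evaluated at a few viewing angles.
errors = {angle: parallax_error_mm(50.0, angle) for angle in (0, 15, 30)}
for angle, error in errors.items():
    print(f"{angle:>2} degrees off-axis: about {error:.1f} mm of shift")
```

Under these assumptions the shift is zero head-on, about 13 mm at 15 degrees, and about 29 mm at 30 degrees, which is large compared with the size of an eye. A 3D display avoids this by showing each viewer an image rendered for their own direction.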

There are several established ways to display 3D images. For this research, we used a microlens-array light field display because it’s thin, simple to construct, and based on existing consumer LCD technology. These displays use a tiny grid of lenses that send light from different LCD pixels out in different directions, with the effect that an observer sees a different image when looking at the display from different directions. The perspective of the images shifts naturally so that any number of people in the room can look at the light field display and see the correct perspective for their location.
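The direction-dependent behavior can be illustrated with a simplified thin-lens model. The sketch below assumes the LCD sits at the focal plane of each lenslet, so a pixel offset from the lenslet's axis leaves as a beam tilted toward the opposite side. The function name and all numeric values (five pixels per lens, 0.05 mm pixel pitch, 0.5 mm focal length) are hypothetical and are not the prototype's specifications.

```python
import math

def pixel_ray_angle_deg(sub_index, pixels_per_lens, pixel_pitch_mm, focal_length_mm):
    """Approximate emission angle of the pixel at position sub_index
    (0 = leftmost) under a single lenslet, in a paraxial thin-lens model."""
    # Offset of the pixel center from the lenslet's optical axis.
    center = (pixels_per_lens - 1) / 2.0
    offset_mm = (sub_index - center) * pixel_pitch_mm
    # A pixel on the focal plane exits as a collimated beam tilted
    # toward the opposite side of the axis, hence the sign flip.
    return math.degrees(math.atan2(-offset_mm, focal_length_mm))

# Hypothetical lenslet covering 5 pixels.
angles = [pixel_ray_angle_deg(k, 5, 0.05, 0.5) for k in range(5)]
for k, angle in enumerate(angles):
    print(f"pixel {k}: {angle:+.1f} degrees")
```

In this model each of the five pixels under a lens is visible from a different direction, so a viewer who moves sideways sees a different set of pixels across the whole panel. The trade-off is that spatial resolution is divided among the views.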

As with any early-stage research prototype, this hardware still carries significant limitations: First, the viewing angle can’t be too severe, and second, the prototype can only show objects in sharp focus that are within a few centimeters of the physical screen surface. In practice, neither limit is a major obstacle here. Conversations take place face-to-face, which naturally limits reverse passthrough viewing angles. And the wearer’s face is only a few centimeters from the physical screen surface, so the technology works well for this case. It will work even better if VR headsets continue to shrink in size, using methods such as holographic optics.

Building the research prototype

FRL researchers used a Rift S for early explorations of reverse passthrough. As the concept evolved, the team began iterating on Half Dome 2 to build the research prototype presented this year at SIGGRAPH. Stripping down the headset to the bare display pod, mechanical research engineer Joel Hegland provided a roughly 50-millimeter-thick VR headset to serve as a base for the latest reverse passthrough demo. Then, optical scientist Brian Wheelwright designed a microlens array to be fitted in front.

The resulting headset contains two display pods that are mirror images of each other. They contain an LCD panel and lens for the base VR display. A ring of infrared LEDs illuminates the part of the face covered by the pod. A mirror that is reflective only for infrared light sits between the lens and screen, so that a pair of infrared cameras can view the eye from nearly head-on. Doing all this in the invisible infrared band keeps the eye imaging system from distracting the user from the VR display itself. Then the front of the pod has another LCD with the microlens array.

Top: A cutaway view of one of the prototype display pods. Bottom: The prototype display pod with driver electronics, prior to installation in the full headset prototype.

Imaging eyes and faces in 3D

Producing the interleaved 3D images to show on the light field display presented a significant challenge in itself. For this research prototype, Matsuda and team opted to use a stereo camera pair to produce a surface model of the face, then projected the views of the eye onto that surface. While the resulting projected eyes and face are not lifelike, this is just a short-term solution to pave the way for future development.
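To make "interleaved" concrete, here is a minimal sketch of how several rendered views might be woven into a single panel image for a display with horizontal parallax only. This is not the team's pipeline. It assumes each view has one sample per lenslet, that views are ordered from the leftmost viewing direction to the rightmost, and that each lenslet flips left and right, so the pixel on the left under a lens carries the view meant for a viewer on the right. The function name and the toy data are hypothetical.

```python
def interleave_views(views):
    """Weave per-direction views into one panel image.

    views: list of images ordered from leftmost to rightmost viewing
           direction; each image is a list of rows, and each row holds
           one sample per lenslet.
    Returns a list of rows with len(views) pixels under every lenslet.
    """
    num_views = len(views)
    num_rows = len(views[0])
    num_lenses = len(views[0][0])
    panel = []
    for r in range(num_rows):
        row = [None] * (num_lenses * num_views)
        for j in range(num_lenses):
            for k in range(num_views):
                # Reverse the view order under each lens to account for
                # the assumed left-right flip through the lenslet.
                row[j * num_views + k] = views[num_views - 1 - k][r][j]
        panel.append(row)
    return panel

# Toy example: three views (left, center, right), one row, two lenslets.
views = [[["L0", "L1"]], [["C0", "C1"]], [["R0", "R1"]]]
panel = interleave_views(views)
print(panel[0])
```

A real light field display would interleave in both dimensions and would need calibration between the lens array and the pixel grid, which this sketch leaves out.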

FRL’s Codec Avatars research points toward the next generation of this imaging. Codec Avatars are realistic representations of the human face, expressions, voice, and body that, via deep learning, can be driven from a compact set of measurements taken inside a VR headset in real time. These virtual avatars should be much more effective for reverse passthrough, allowing for a unified system of facial representation that works whether the viewer is local or remote.

Shown below, a short video depicts a Codec Avatar from our Pittsburgh lab running on the prototype reverse passthrough headset. These images, and their motion over time, appear much more lifelike than those captured using the current stereo camera method, indicating the sort of improvements that such a system could provide while working in tandem with remote telepresence systems.

The reverse passthrough prototype displaying a high-fidelity Codec Avatar facial reconstruction.

A path toward social co-presence in VR

Totally immersive VR and AR glasses with a display are fundamentally different technologies that will likely end up serving different users in different scenarios in the long term. There will be situations where people will need the true transparent optics of AR glasses, and others where people will prefer the image quality and immersion of VR. Facebook Reality Labs Research, under Michael Abrash’s direction, has cast a wide net when probing new technical concepts in order to move the ball forward across both of these display architectures. Fully exploring this space will ensure that the lab has a grasp on the full range of possibilities — and limitations — for future AR/VR devices, and eventually put those findings into practice in a way that supports human-computer interaction for the most people in the most places.

Reverse passthrough is representative of this sort of work — an example of how ideas from around the lab are pushing the utility of VR headsets forward. Later this year, we’ll give a more holistic update on our display systems research and show how all this work — from varifocal, holographic optics, eye tracking, and distortion correction to reverse passthrough — is coming together to help us pass what we call the Visual Turing Test in VR.

Ultimately, these innovations and more will come together to create VR headsets that are compact, light, and all-day wearable; that mix high-quality virtual images with high-quality real-world images; and that let you be socially present with anyone in the world, whether they’re on the other side of the planet or standing next to you. Making that happen is our goal at Facebook Reality Labs Research.
